keep your wits, and keep your soul from going numb

i want to weave a few threads together today, in an attempt to make meaning of some things around AI i've been grappling with lately (foreshadowing!). the general thesis that i think is emerging is something like: we must actively train our meaning-making muscles. we cannot let them atrophy, and we need to be more cognizant of this process, especially in this new horizon of LLMs.

recently, i've been watching something interesting unfold while scrolling on twitter/x.

many big posts, often about a controversial or newsworthy topic (though not always), will be flooded with replies that tag grok with a familiar refrain:

“@grok, is this true?”

and grok, elon’s pet language model, dutifully responds.

the tweet might proclaim something ridiculous like, i don't know: “the moon died because it was vaccinated.” and grok replies: “the moon is not alive and therefore cannot be vaccinated.”

on paper, this seems helpful. a small miracle, even. an assistant that can help with fact-checking ridiculous stuff, served right in the thread? no need to go googling or engage in messy arguments! finally, a neutral arbiter, verifying the truth of tweets and summarising current affairs with precision and speed!

but it’s not just the ridiculous posts. people tag grok under just about anything now: breaking news, genuine questions, old memes, celebrity drama, CCTV footage of a crime. grok gets asked to weigh in on it all – whether it has the context or not. and we’re rarely left with more clarity on the situation. rather, we get an illusion of certainty, delivered in a confident tone, regardless of how much the AI actually understands.

Hey @grok is this meme factually true? Answer in yes or no.

— Dispropaganda (@Dispropoganda) March 26, 2025

the reply – @grok is this true?????

bless grok's heart

i find the dynamics emerging from this fact-checking phenomenon to be quite concerning.

for one, people seem to be using this not just as a truth-seeking device but as a rhetorical weapon. instead of replying with your own words, you can summon the oracle to expose someone to their own wonderful idiocy. it’s not unlike quoting scripture to win an argument; except now the scripture was trained on reddit threads and facebook groups and every blog post from the last decade. i think this dunking is not so good for healthy public discourse.

maybe you think, well, it’s still better than letting mis/disinformation run wild. and yeah, i can see that. there’s something, i admit, a little hopeful here. people do seem to be questioning more. trying, at least, not to blindly accept whatever The Timeline is serving up.

but what happens when the tool people trust to arbitrate truth… just gets it wrong? or worse, starts hallucinating confidently, at scale? that’s what i see creeping in now. people are asking grok to settle debates about geopolitics, climate, race, vaccines, war. and grok, bless its synthetic heart, will do its best to oblige – even if it doesn’t know the full context of a situation.

it regularly fumbles, and nonchalantly improvises. and if people are asking it to tell them what to believe en masse, i worry we’re inching toward a future where our collective meaning-making is dictated not by (painstaking and often uncomfortable) shared inquiry or personal reflection, but by the best guess of a well-spoken chatbot. that would be tragic, because meaning-making is a muscle, and like any muscle, it can wither if you don’t use it.

AI as therapist

connected to this: a couple weeks back, i co-hosted a discussion gathering on the theme of giving and receiving help. one of the more surprising revelations that emerged from our discussion was just how many people have begun using AI - chatgpt and claude in particular - as a kind of emotional support system/therapist.

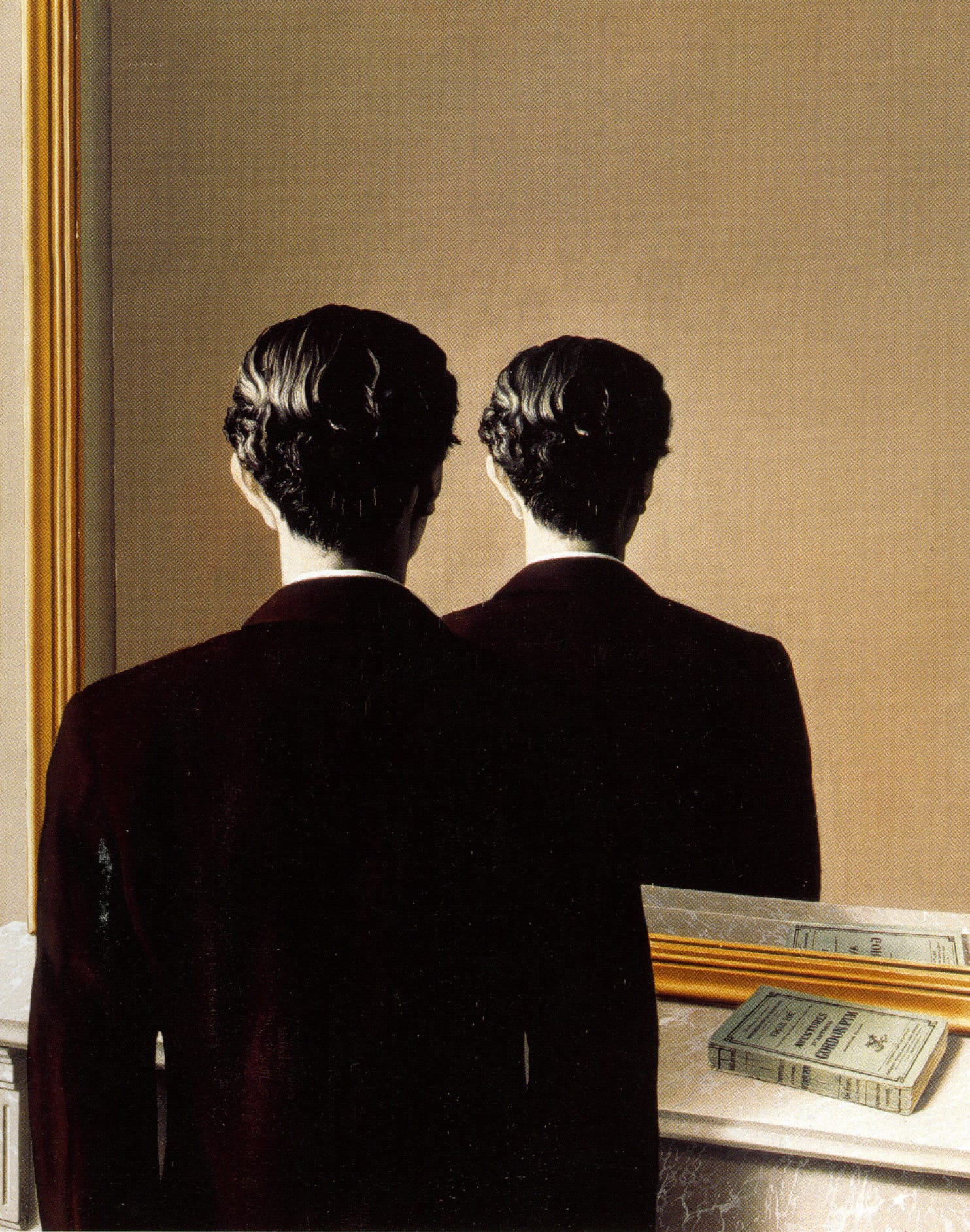

one participant shared a cautionary tale which i thought was apt: don’t stay in the same thread with these tools for too long. not just because the AI might lose coherence as the conversation grows long, but because if you feed it your emotional baggage over and over, it starts to mirror it back in ever so subtle ways.

technically speaking (as i understand it, at least), this happens because language models generate responses based partly on conversational history. they try to match your tone, your energy, and your worldview. so if you’re spiralling, it spirals with you (albeit giving you pseudo-supportive words of encouragement along the way). it amplifies whatever you give it. thus, you're probably not going to get a response that genuinely challenges you or lifts you out of the prickly emotional situation you might find yourself in.

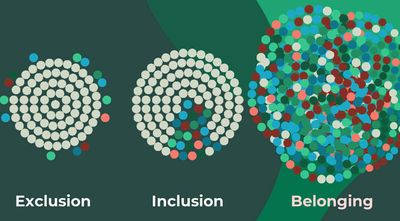

my worry continues here. we’ve gone wrong somewhere if we feel we can no longer reach toward others for support, and instead turn to chatbots for solace. in certain situations—especially when someone feels utterly alone—i can see how this might be a lifeline. but as a broader trend, i’m uneasy with how quickly we’re becoming accustomed to the idea that emotional clarity and meaning can be summoned on demand, and that we no longer need other people to process the icky stuff. when we start believing that, we lose sight of the inherently slow, relational, co-created nature of real understanding. the kind that doesn’t come with a sugar-coated summary.

the direction of desire

a video i watched recently (a charisma on command video on the masculinity crisis, of all things) helped me see all this from yet another angle.

amidst the discussion of modern masculinity and all the ways it's developing sub-optimally, there is a line related to our use of social media that stuck with me. i'm paraphrasing, but it was something like:

“we can be entertained for hours without once being in connection with our desire.”

i think this is an astute observation, and exactly where so many of us are. not just distracted, but more fundamentally severed from the internal thread of desire by the feeds we scroll ad nauseam, which hand-deliver what we will like with incredible accuracy. i feel strongly that this is not because we have no clue what we'd actually be interested in, but simply because it’s easier. easier to let the algorithm decide what to watch, what to read, what to care about next.

desire is not convenient, though. desire is directional. it’s the spark that says “go here, not there.” and when you actually feel it, like reaaaally tune in, it starts pulling you toward things. surprising things. uncomfortable things, perhaps. risky things. it forces you to care, to choose, to say no to a hundred other things.

if you name what you want, or try to assert a claim as you fumble your way through sense-making in this complex world, you also expose yourself. you step into the dualistic realm of judgment. suddenly there's right and wrong, and you are IN that arena. now people can disagree with what you care about. they can critique your stance, or your longing, or whatever it is. they will demand that you defend it. and for many of us, that’s terrifying. much easier to stay vague. to play it cool, stay in the in-group with those who share your ideology, or scroll past the messiness of standing up for anything and just ✨vibe✨

okay, a lot of AI doom and gloom. so what do we do with all this?

i keep returning to this tweet from Visa, who i believe thinks through these cultural shifts with a high degree of clarity:

I believe that taste is one of the most powerful, precious, fragile things in the known Universe and should be cultivated accordingly https://t.co/yt9Fv4lAsI

— Visakan Veerasamy (@visakanv) October 20, 2017

i don’t think he means mere artistic taste here. not just your favourite fonts or films. something more like... existential taste. the muscle that helps you say: this matters to me. this is beautiful. this feels alive and worth protecting. taste, in this sense, is a kind of compass of your inner life. it’s what helps you orient in a world where everything is screaming for your attention. it is, in that sense, also the birthplace of meaning-making, because having taste means you no longer occupy a space of endless neutrality.

if i had a billboard about this, the point i would hammer home is something like:

cultivating taste takes time. effort. repetition. refusal.

it means noticing your reactions ongoingly. tracing the threads of what you love back to their roots. not just saying “i like this!” but asking why. a useful exercise is to list 100 things you love (or hate, that works too i guess), and for each one, try to articulate what exactly it evokes. what it stirs in your soul. what kind of world it gestures toward.

i think such exercises are becoming ever more critical within the looming context of AGI. if you don’t know what you love, then what’s guiding your decisions? what’s shaping your sense of rightness or wrongness, beauty or harm? what’s holding you steady when the cultural winds shift, and when the LLMs are guiding what all of us should think?

as you develop taste, the last thing i want to leave you with is an excerpt from peter limberg. here is one of the concluding tidbits of advice from his post:

Beware of “Centaur Thinking.” This is the fusion of human and AI thought in a way that shapes your creativity and decision-making. It often starts innocently as “brainstorming” with AI but can become a slippery slope where your most important ideas and choices depend on having a chatbot nearby. Centaur thinking can become AI thinking.

don’t forget how to think alone. don't let your soul go numb.